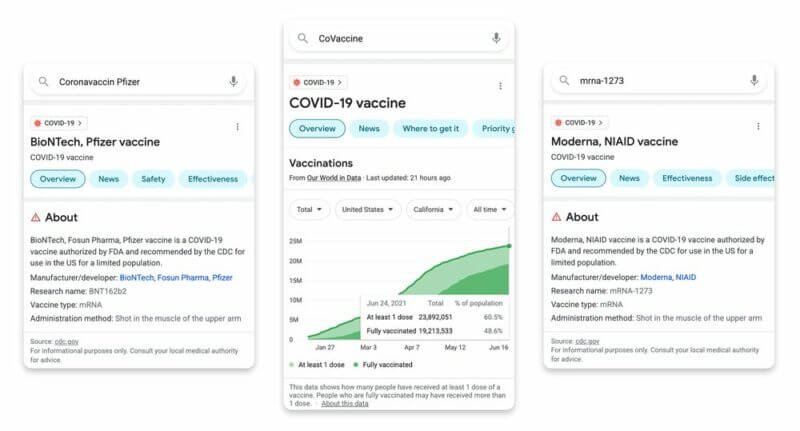

In its first application, Google’s Multitask Unified Model (MUM) technology enabled the search engine to identify more than 800 variations of vaccine names in over 50 languages in a matter of seconds, the company announced in a blog post on Tuesday.

What is MUM? First announced at Google I/O, MUM is built on a transformer architecture, like BERT, but is touted as being 1,000 times more powerful and is capable of multitasking to connect information for users in new ways (such as the aforementioned identification and matching of vaccine names across languages).

During its unveiling, Google SVP Prabhakar Raghavan gave the following examples of what MUM can do simultaneously:

- Understand and generate language.

- Train across 75 languages.

- Understand multiple modalities (that is, multiple forms of information, like images, text and video).

Why we care. This is our first glimpse of MUM in the wild and it provides us with a better idea of its real-world use cases. In this implementation, MUM’s language capabilities were on display, but we did not get to see its multimodal capabilities, which were highlighted during the announcement at I/O.

Google says MUM completed this task in a matter of seconds when it otherwise might have taken weeks. While the impact here is subtle, users are getting more relevant results, which will help Google maintain its position as the market leader. If Google can deliver on this technology as it was advertised during its unveiling, it may enable users to search in ways they previously thought were too complex for search engines to understand. Even if the impact turns out to be less splashy than that, the efficiency displayed here will still give Google another advantage over competitors.

This story first appeared on Search Engine Land.

The post Google’s MUM identifies 800 variations of vaccine names across 50 languages in seconds appeared first on MarTech.